How to Download Files on Linux Using Terminal

Usually, when you want to download a file from the internet, you launch your browser, navigate to the file and download it. But have you ever thought of downloading a file from the terminal instead? In this post, I will show you two methods to download files on Linux using the terminal.

The two main methods for downloading files from the terminal on Linux are the wget and curl commands. Other options can be used for special purposes, but these commands are usually sufficient.

To help you understand each command better, I will first show you the basic syntax and the most important options for each command, and then finish with real-world use cases and bonus tips.

The wget Command

The wget command is one of the most popular utilities for downloading files from the internet using the terminal. It supports several network protocols, including HTTP, HTTPS and FTP. To download a file with it, type wget followed by the target URL.

Here is the basic syntax:

wget [options] [URL]

Installing wget

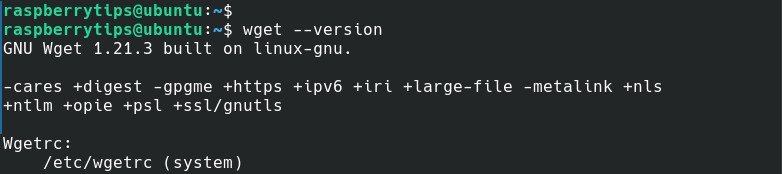

The wget command comes pre-installed on most Linux distributions. You can verify that with the command below:

wget --version

If it isn't installed, use the command for your distribution:

- Debian-based distributions (e.g., Debian, Ubuntu, Linux Mint):

sudo apt update
sudo apt install wget

- RHEL-based distributions (e.g., Red Hat Enterprise Linux, CentOS, Fedora):

sudo dnf install wget

Or, on older releases that use yum:

sudo yum install wget

- Arch Linux-based distributions (e.g., Arch Linux, Manjaro):

sudo pacman -S wget
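If you set up machines with scripts, a small check like the one below can tell you whether wget is already there. This is a minimal sketch: install_hint is a hypothetical helper, not part of wget, and it only prints the install command for the first package manager it finds (apt, dnf, yum or pacman, matching the commands above) instead of running it.

```shell
#!/bin/bash
# Hypothetical helper (not part of wget): report whether a tool is installed
# and, if not, print the install command for the first package manager found.
# It only prints the command; it does not install anything.
install_hint() {
  local tool="$1"
  if command -v "$tool" >/dev/null 2>&1; then
    echo "$tool is already installed"
  elif command -v apt >/dev/null 2>&1; then
    echo "sudo apt update && sudo apt install $tool"
  elif command -v dnf >/dev/null 2>&1; then
    echo "sudo dnf install $tool"
  elif command -v yum >/dev/null 2>&1; then
    echo "sudo yum install $tool"
  elif command -v pacman >/dev/null 2>&1; then
    echo "sudo pacman -S $tool"
  else
    echo "no supported package manager found" >&2
    return 1
  fi
}
```

For example, install_hint wget either confirms that wget is present or prints the command to install it. The same helper works for curl later in this post.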


Main Options

The syntax above includes an [options] parameter. Here are the most useful options for downloading files with wget:

- -O: Specifies the output filename of the file you are downloading. For example, -O imageOne.png saves the downloaded file as imageOne.png.

- -q, --quiet: Turns off output messages, so wget runs quietly.

- -c, --continue: Resumes a partially downloaded file.

- -r, --recursive: Downloads recursively, i.e., follows the links found at the specified URL and downloads the linked pages and files (up to five levels deep by default).

- -np, --no-parent: Stops wget from ascending to the parent directory, so it only downloads files from the specified directory and below.

- -P, --directory-prefix: Saves all downloaded files to the specified directory. For example, -P /path/to/save saves the downloaded files to /path/to/save.

- -i, --input-file: Downloads all the URLs listed in a file.

Use the command below to see all the options available for wget:

wget --help
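To see how these options combine, here is a minimal sketch that downloads a URL quietly into a folder and resumes any partial file. The function name fetch_to_dir and the DRY_RUN switch (which prints the command instead of running it) are illustrative choices, not wget features.

```shell
#!/bin/bash
# Sketch: combine -q, -c and -P from the list above.
# fetch_to_dir and DRY_RUN are illustrative names, not wget features.
fetch_to_dir() {
  local dir="$1" url="$2"
  # -q: no output, -c: resume a partial file, -P: save into $dir
  local cmd=(wget -q -c -P "$dir" "$url")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"   # show the command instead of running it
  else
    "${cmd[@]}"
  fi
}
```

For example, fetch_to_dir downloads https://wallpapercave.com/wp/wp13948108.jpg saves the wallpaper in a downloads folder, and running it again after an interruption continues the partial file.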

Wget Command Examples

Let us look at real-world use cases of the wget command.

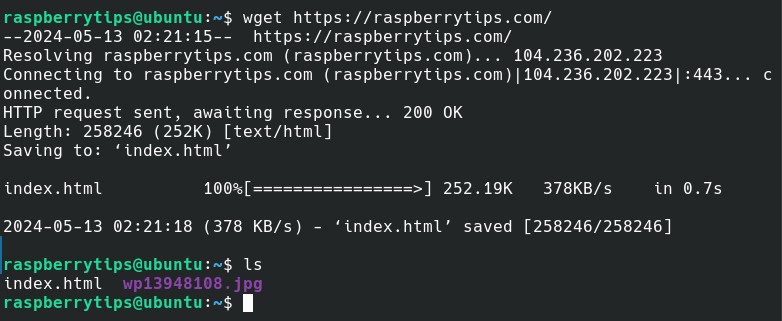

Download a file using wget

To download a file or web page, call the wget command and pass the file or webpage’s URL. It will download the file to your current working directory.


For example:

wget https://wallpapercave.com/wp/wp13948108.jpg

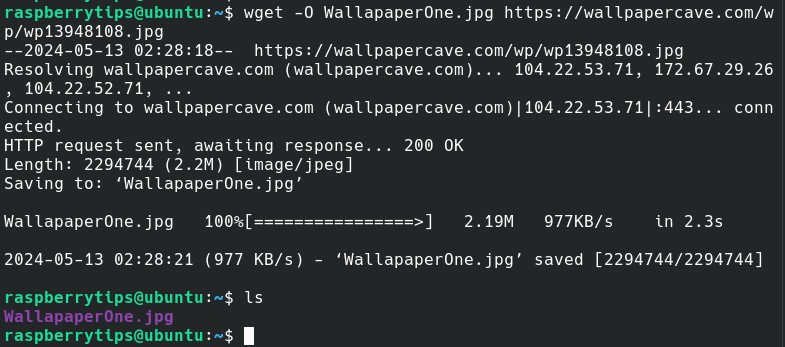

Download files with a different name using wget

To save a downloaded file under a different filename, use the -O option. In the example below, I downloaded a random wallpaper from the Wallpapercave website and saved it as “WallpaperOne.jpg”.

wget -O WallpaperOne.jpg https://wallpapercave.com/wp/wp13948108.jpg
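If you want to keep part of the original name, for example to add a prefix, you can derive it from the URL in the shell. The sketch below assumes the part you want is the last path segment, with any ?query or #fragment removed; name_from_url is a hypothetical helper, and its result is not necessarily the exact name wget would choose on its own.

```shell
#!/bin/bash
# Hypothetical helper: return the last path segment of a URL, dropping any
# #fragment and ?query string, so it can be reused in a custom -O filename.
name_from_url() {
  local url="${1%%#*}"   # strip the fragment
  url="${url%%\?*}"      # strip the query string
  echo "${url##*/}"      # keep the text after the last slash
}
```

Usage example:

url=https://wallpapercave.com/wp/wp13948108.jpg
wget -O "wallpaper-$(name_from_url "$url")" "$url"

This saves the file as wallpaper-wp13948108.jpg.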

Download a folder using wget

To download an entire folder, use the -r (recursive) option, which downloads the folder with all of its contents. This example also needs a few options I did not list above, so let's look at the command first and then go through each option.

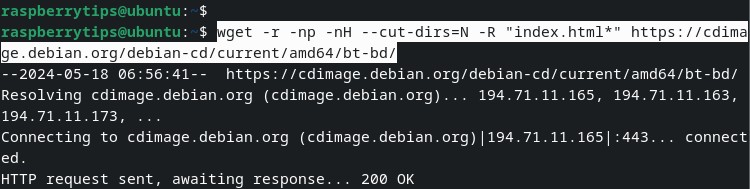

wget -r -np -nH --cut-dirs=3 -R "index.html*" https://cdimage.debian.org/debian-cd/current/amd64/bt-bd/

This command aims to download the bt-bd folder that you can see at the end of the URL. Let us have a quick look at the options used:

- -r: Downloads the files recursively.

- -np: No parent; ensures wget does not ascend to the parent directory.

- -nH: No host directories; prevents wget from creating a directory named after the hostname (here, cdimage.debian.org).

- --cut-dirs=3: Ignores the given number of leading directories when saving files. Here we skip three of them (debian-cd, current and amd64, which you can see in the URL), so the files end up in a local bt-bd folder.

- -R "index.html*": The -R option specifies file name patterns to reject. In my case, I did not want to keep any of the index.html directory listing pages.
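The --cut-dirs value is simply the number of directories between the host name and the folder you want to keep. Here is a minimal sketch that counts them for you, assuming the URL points to a folder below the site root; cut_dirs_for is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: count the directories to skip with --cut-dirs so that
# only the last directory in the URL is recreated locally (when used with -nH).
cut_dirs_for() {
  local path="${1#*://}"   # drop the scheme, e.g. "https://"
  path="${path#*/}"        # drop the host name
  path="${path%/}"         # drop a trailing slash
  local -a parts
  IFS='/' read -r -a parts <<< "$path"
  echo $(( ${#parts[@]} - 1 ))
}
```

Usage example:

url=https://cdimage.debian.org/debian-cd/current/amd64/bt-bd/
wget -r -np -nH --cut-dirs="$(cut_dirs_for "$url")" -R "index.html*" "$url"

For this URL, cut_dirs_for returns 3, the same value used in the command above.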

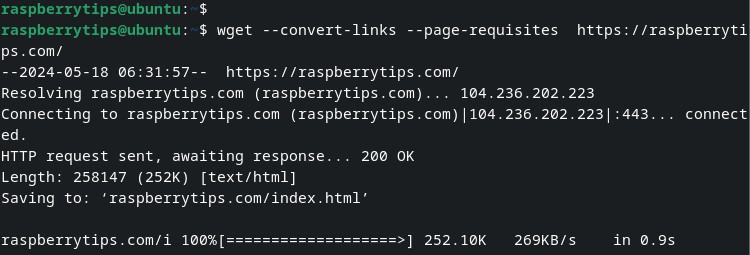

Download an entire website using wget

You can also use wget to download an entire website. It’s like creating a mirror of the website that you are currently viewing. That includes all the images, style sheets, pages etc.

Three options are needed to achieve this:

- --mirror: Turns on recursion with unlimited depth (plus timestamping), so wget follows links to the site's other pages. Without it, or at least -r, wget only saves the page at the URL you give it.

- --convert-links: Converts the links in the downloaded pages to point to the local copy instead of the live website.

- --page-requisites: Downloads the other elements each page needs, such as images and style sheets, so the local copy looks like the live website.

wget --mirror --convert-links --page-requisites https://raspberrytips.com/

When I opened the downloaded website, all the content from the live website was present. However, the design looked broken. This likely means the site relies on styling served from a CDN or another domain, which wget did not download because, by default, it stays on the original host.
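If the missing styling comes from another host and you only need a single page, the wget manual suggests combining -p with -E, -H, -k and -K so that requisites hosted elsewhere are fetched too. The sketch below wraps that combination; save_page_offline and DRY_RUN are illustrative names, and I have not checked whether it fixes the design of this particular site.

```shell
#!/bin/bash
# Sketch of the single-page combination described in the wget manual.
# save_page_offline and DRY_RUN are illustrative names.
save_page_offline() {
  local url="$1"
  # -E: add .html/.css extensions   -H: allow requisites from other hosts
  # -k: convert links for offline   -K: keep .orig copies of converted files
  # -p: fetch images, style sheets and other page requisites
  local cmd=(wget -E -H -k -K -p "$url")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

-H is deliberately not combined with -r here: together, they would let wget follow links onto other websites.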

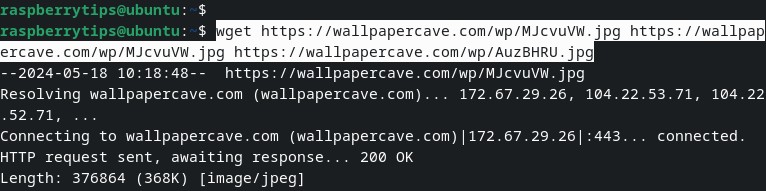

Downloading Multiple Files

You can also download multiple files with wget. There are two ways to do this:

- Add all download links in a single wget command.

- Add the download links in a text file.

Let's start with the first option, which works well when you only have a few files to download: call wget and pass all the URLs, separated by spaces.

wget https://wallpapercave.com/wp/MJcvuVW.jpg https://wallpapercave.com/wp/MJcvuVW.jpg https://wallpapercave.com/wp/AuzBHRU.jpg

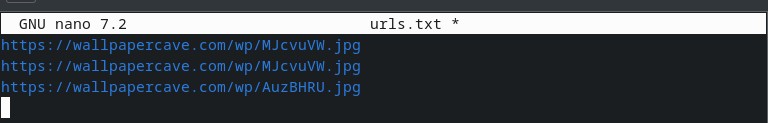

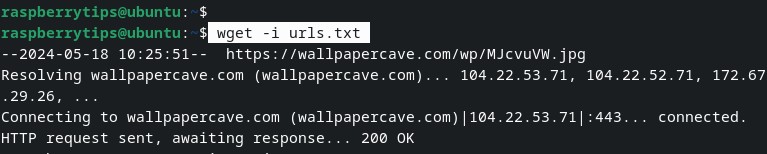

The other option is to save the URLs in a text file. Follow the steps below:

- Create a text file using the nano editor:

nano urls.txt

- Paste your links, one URL per line, then save the file (Ctrl + S) and exit (Ctrl + X).

- Call the wget command and use the -i option to pass the text file as shown below:

wget -i urls.txt
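Note that the example URLs above include MJcvuVW.jpg twice, so the same image would be requested twice. If you build the list file from the shell instead of nano, you can drop duplicates on the way. A minimal sketch, with write_url_list as a hypothetical helper:

```shell
#!/bin/bash
# Hypothetical helper: write URLs to a list file for "wget -i", one per line,
# dropping duplicates while keeping the original order.
write_url_list() {
  local file="$1"
  shift
  printf '%s\n' "$@" | awk '!seen[$0]++' > "$file"
}
```

Usage example:

write_url_list urls.txt https://wallpapercave.com/wp/MJcvuVW.jpg https://wallpapercave.com/wp/MJcvuVW.jpg https://wallpapercave.com/wp/AuzBHRU.jpg
wget -i urls.txt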

Tip: Resume an interrupted download using Wget

If a download was interrupted, for example by pressing Ctrl + C in the terminal, you can resume it by running wget again with the -c option and the same URL:

wget -c [URL]
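On unreliable connections, you can also rerun wget -c automatically. wget already retries some network errors by itself (see its --tries option), so the loop below is only a sketch for cases where the whole command gives up; retry is a hypothetical helper that runs any command up to a given number of times, pausing in between.

```shell
#!/bin/bash
# Hypothetical helper: run a command up to $1 times, sleeping $2 seconds
# between attempts, and stop as soon as it succeeds.
retry() {
  local attempts="$1" delay="$2"
  shift 2
  local i
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    if (( i < attempts )); then
      echo "Attempt $i failed, retrying in ${delay}s..." >&2
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, retry 5 10 wget -c https://wallpapercave.com/wp/wp13948108.jpg tries up to five times, waiting 10 seconds between attempts, and because of -c each attempt continues the partial file instead of starting over.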


The Curl Command

Curl (Client URL) is a popular Linux command-line utility, used for various purposes including data transfer and checking URLs.

For this post, I will focus on how to use Curl to download files from your Linux terminal.

The general syntax is:

curl [options] [URL]

Installing curl

Despite being a very popular command, Curl is not always pre-installed; for example, some Debian and Ubuntu installations don't include it. Below are the commands for installing Curl on different Linux distributions.

- Debian-based distributions (e.g., Debian, Ubuntu, Linux Mint):

sudo apt update
sudo apt install curl

- RHEL-based distributions (e.g., Red Hat Enterprise Linux, CentOS, Fedora):

sudo dnf install curl

Or, on older releases that use yum:

sudo yum install curl

- Arch Linux-based distributions (e.g., Arch Linux, Manjaro):

sudo pacman -S curl

Main Options

As I said earlier, Curl is a powerful command used for many purposes, including API testing, so it supports a very large number of options. Here, we will only look at the few options you need for downloading files.

- -O: Saves the downloaded file with its original (remote) name.

- -o [filename]: Saves the file with the specified name.

- -s: Enables silent mode, so nothing (not even error messages) is displayed in the terminal.

- -sS: Similar to silent mode (-s), but still displays any errors in the terminal.

- -v: Verbose mode. Curl displays detailed information about the download.
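A common combination for scripts pairs -sS with -o and adds two more standard options: -f, so that most HTTP errors (such as 404) make curl exit with an error instead of saving the error page, and -L, so that redirects are followed. download_to is a hypothetical wrapper name used for illustration.

```shell
#!/bin/bash
# Sketch: a quiet curl download that still reports errors.
# download_to is a hypothetical name; the flags are standard curl options.
download_to() {
  local url="$1" out="$2"
  # -f: fail on HTTP errors instead of saving the error page
  # -sS: silent, but still show errors   -L: follow redirects
  # -o: write to the given file name
  curl -fsSL -o "$out" "$url"
}
```

For example, download_to https://wallpapercave.com/wp/wp13948108.jpg MyWallpaper.jpg saves the wallpaper and returns a non-zero exit code if the download fails, which a script can check.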

Curl Command Examples

Let us look at a few real-world examples that you can use to download files with Curl from your Linux terminal.

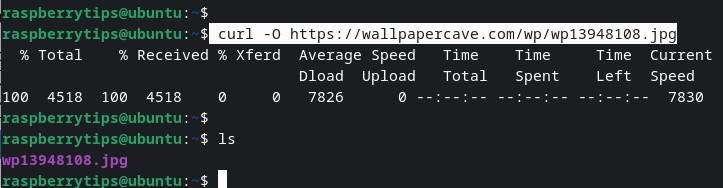

Download a single file with Curl

To download a file with Curl, pass the URL of the file you want to download.

I use the -O option so the file is saved with its original name; without it, Curl prints the file's contents to the terminal instead of saving it.

curl -O https://wallpapercave.com/wp/wp13948108.jpg
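curl -O always saves into the current directory. To keep the original name but store the file elsewhere, one portable approach is to run curl from that folder inside a subshell, so your own working directory doesn't change; newer curl releases (7.73 and later) also offer an --output-dir option. fetch_into is a hypothetical name.

```shell
#!/bin/bash
# Sketch: save a file under its remote name (-O) inside a chosen folder.
# The subshell keeps the "cd" from changing your current directory.
# fetch_into is a hypothetical name.
fetch_into() {
  local dir="$1" url="$2"
  mkdir -p "$dir"
  ( cd "$dir" && curl -fsS -O "$url" )
}
```

For example, fetch_into wallpapers https://wallpapercave.com/wp/wp13948108.jpg saves the file as wallpapers/wp13948108.jpg.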

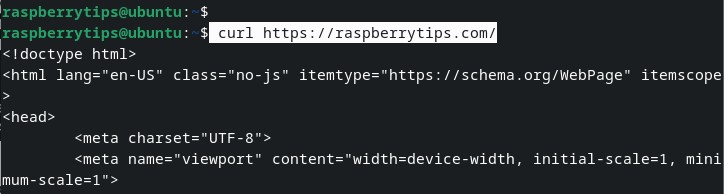

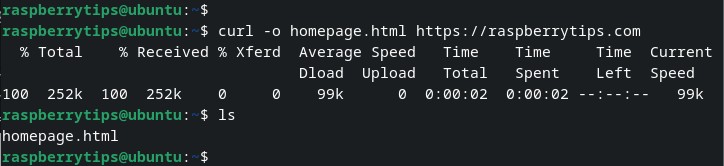

Download a web page using Curl

You might think that downloading a web page only requires passing its URL to Curl. That won't work as expected: by default, Curl writes whatever it downloads to the terminal (standard output) instead of saving it.

Use the -o option and specify the filename where you want to save the contents of the web page:

curl -o homepage.html https://raspberrytips.com

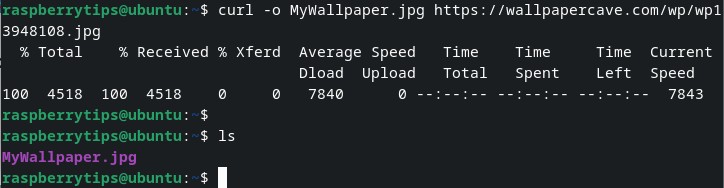

Download files with a different name

As in the previous example, use the -o option followed by the name you want:

curl -o MyWallpaper.jpg https://wallpapercave.com/wp/wp13948108.jpg

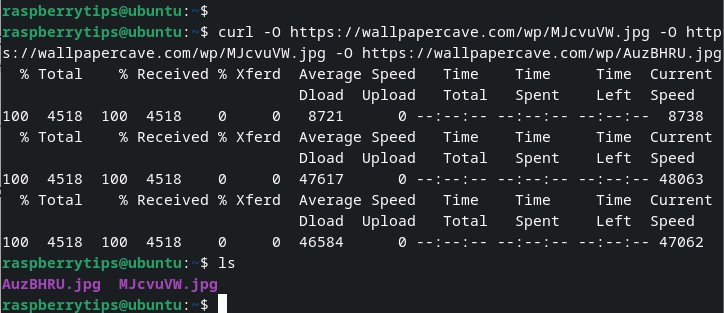

Download multiple files with Curl

Downloading multiple files takes a bit more typing than with wget. With wget, you only need to pass the URLs of the files you want to download. With Curl, you add a -O option before each URL; any URL without one is printed to the terminal instead of saved. See below.

curl -O https://wallpapercave.com/wp/MJcvuVW.jpg -O https://wallpapercave.com/wp/MJcvuVW.jpg -O https://wallpapercave.com/wp/AuzBHRU.jpg
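If repeating -O gets tedious, curl also has a --remote-name-all option that applies -O to every URL on the command line. A minimal sketch wrapping it, where fetch_all is a hypothetical name and the first argument is the folder to save into:

```shell
#!/bin/bash
# Sketch: download several URLs, each saved under its remote name,
# using --remote-name-all instead of one -O per URL.
# fetch_all is a hypothetical wrapper name.
fetch_all() {
  local dir="$1"
  shift
  mkdir -p "$dir"
  ( cd "$dir" && curl -fsS --remote-name-all "$@" )
}
```

For example, fetch_all wallpapers https://wallpapercave.com/wp/MJcvuVW.jpg https://wallpapercave.com/wp/AuzBHRU.jpg saves both images in the wallpapers folder.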

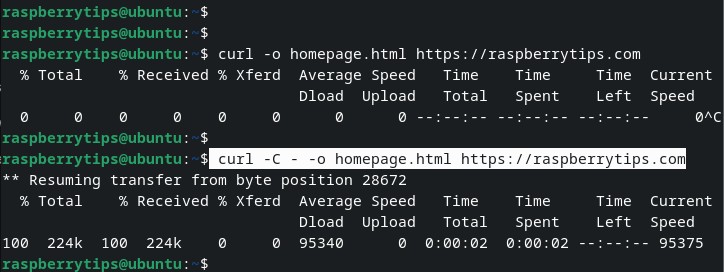

Pause and resume download with curl

To stop a download when using Curl, press Ctrl + C on your keyboard. To resume it, run the same command again with the -C - option; the dash tells Curl to work out where to resume from the size of the partially downloaded file.

curl -o homepage.html https://raspberrytips.com

I stopped the download above and resumed it with the command below:

curl -C - -o homepage.html https://raspberrytips.com

Bash Scripts

Like many other Linux command-line utilities, you can use both wget and curl in your scripts to perform more advanced tasks.

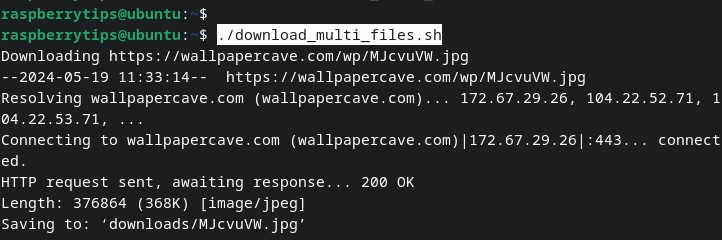

Using wget in Scripts to Download Multiple Files

Launch the terminal and follow the steps below:

- Create an empty script file using the Nano or Vim editor. In my case, I will use Nano:

nano download_multi_files.sh

- Paste the code below. I have added comments explaining what each part does.

#!/bin/bash
# List of URLs to download
urls=(
  "https://wallpapercave.com/wp/MJcvuVW.jpg"
  "https://wallpapercave.com/wp/MJcvuVW.jpg"
  "https://wallpapercave.com/wp/AuzBHRU.jpg"
)
# Directory to save downloaded files
output_dir="downloads"
# Create the directory if it doesn't exist
mkdir -p "$output_dir"
# Loop through each URL and download the file
# (-c resumes partial files, -P saves them into $output_dir)
for url in "${urls[@]}"; do
  echo "Downloading $url"
  wget -c -P "$output_dir" "$url"
done

- Save the file (Ctrl + S) and exit (Ctrl + X).

- Make the file executable using the chmod command as shown below:

chmod +x download_multi_files.sh

- You can now run the script as shown below:

./download_multi_files.sh

Using Curl in Scripts to Download Multiple Files

I will modify the script above to use Curl instead of wget. I will also introduce a Curl option we haven't looked at before: --limit-rate, which limits the download speed.

#!/bin/bash
# List of URLs to download
urls=(
  "https://wallpapercave.com/wp/MJcvuVW.jpg"
  "https://wallpapercave.com/wp/MJcvuVW.jpg"
  "https://wallpapercave.com/wp/AuzBHRU.jpg"
)
# Directory to save downloaded files
output_dir="downloads"
# Create the directory if it doesn't exist
mkdir -p "$output_dir"
# Loop through each URL and download the file into $output_dir.
# -C - resumes partial files; --limit-rate 100k limits the speed to 100 KB/s.
for url in "${urls[@]}"; do
  echo "Downloading $url"
  curl -C - --limit-rate 100k -o "$output_dir/$(basename "$url")" "$url"
done
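Both scripts download one file at a time. For a long list, a sketch like the one below runs several curl downloads in parallel using xargs -P. It assumes a list file with one URL per line and no spaces or quotes in the URLs (xargs would split or interpret them); parallel_fetch and the default of 4 parallel jobs are illustrative choices.

```shell
#!/bin/bash
# Sketch: download every URL in a list file, several at a time.
# xargs -P runs up to $jobs curl processes at once, one URL each (-n 1).
# parallel_fetch is a hypothetical name; the list holds one URL per line.
parallel_fetch() {
  local list="$1" dir="$2" jobs="${3:-4}"
  mkdir -p "$dir"
  # The list is redirected into the subshell before "cd", so a relative
  # path to the list file still works.
  ( cd "$dir" && xargs -n 1 -P "$jobs" curl -fsS -O ) < "$list"
}
```

For example, parallel_fetch urls.txt downloads 4 saves every file listed in urls.txt into the downloads folder, running up to four downloads at once.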


Additional Tips

By now, you should have a good understanding of how to use the wget and curl commands to download files from the terminal.

But did you also know there is a command-line tool for downloading YouTube, Vimeo and Dailymotion videos? Let’s have a quick look at this utility – Youtube-dl.

Youtube-dl (yt-dlp)

Youtube-dl is a command-line utility written in Python for downloading YouTube videos to your system. In this post, we will install yt-dlp, a fork of Youtube-dl, because Youtube-dl currently seems to have trouble downloading some videos.

Follow the steps below to get started with yt-dlp:

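- Install pip for Python 3 and yt-dlp using the commands below:

sudo apt install python3-pip
pip3 install yt-dlp

On recent Debian and Ubuntu releases, pip may refuse to install packages outside a virtual environment (an "externally-managed-environment" error). In that case, installing pipx (sudo apt install pipx) and running pipx install yt-dlp works instead.

- After a successful installation, you can download YouTube videos with yt-dlp as shown below.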

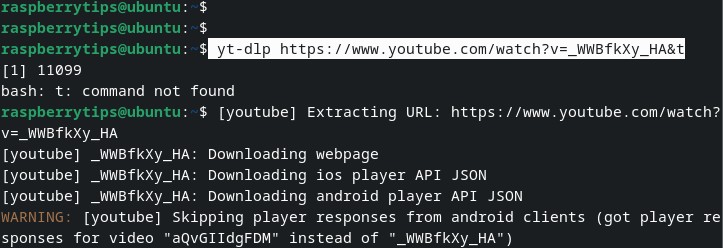

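yt-dlp "https://www.youtube.com/watch?v=_WWBfkXy_HA&t"

Put the URL in quotes. In the screenshot above, I ran the command without them, so the shell treated & as the operator that runs a command in the background: yt-dlp started downloading in the background, and the shell then tried to run t as a separate command. That is why the output begins with "command not found" before the download proceeds.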

